Downside protection when running growth experiments.

"Rule No. 1: Never lose money. Rule No. 2: Don’t forget rule No. 1." - Warren Buffett

I'm in the middle of a months-long deep dive into value investing. For those unfamiliar with this strategy, the most important thing to know is that value investors seek to identify and invest in companies that are undervalued by the market.

For example, let's say I go to a yard sale and buy an unopened Pez dispenser for $25. I had just seen the same Pez dispenser selling like hotcakes on eBay for $200. The market is valuing the dispenser at $200 and I'm purchasing it for $25. That's a difference of $175, which is pretty awesome.

A key tenet of value investing is to protect against the downside (unexpected events that lead to a drop in price). In the Pez example, let's say the market determines that $200 is too expensive and the dispenser should be $150 instead. The good news is that I still have enough margin of safety, so I can still make a profit of $125 when I decide to sell.
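To make the arithmetic concrete, here's a minimal Python sketch of the Pez example. The function name `margin_of_safety` and the `cushion` calculation are my own illustration, not a standard formula from any investing library.

```python
def margin_of_safety(purchase_price: float, market_value: float) -> float:
    """Gap between what the market pays and what I paid."""
    return market_value - purchase_price


purchase_price = 25.0   # what I paid at the yard sale
original_value = 200.0  # what the market pays today
revised_value = 150.0   # what the market pays after the drop

original_margin = margin_of_safety(purchase_price, original_value)  # 175.0
revised_margin = margin_of_safety(purchase_price, revised_value)    # 125.0

# Share of the original market price that can disappear before I break even.
cushion = (original_value - purchase_price) / original_value        # 0.875

print(original_margin, revised_margin, cushion)
```

The market price would have to fall by 87.5% before I lose money, which is why a $50 drop barely matters here.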

This isn't rocket science, but I'd like to explain why downside protection matters when running growth experiments. In addition, I'll share some tactical things you can do to protect against unforeseeable consequences.

1. Create a firm foundation

In value investing, investors tend to outline their reasons for why a business may be undervalued by the market. This consists primarily of financial metrics like revenue, profit, market cap, and other things you probably don't care to learn about right now. In addition, there are usually some qualitative reasons for why a business might be undervalued, which typically revolve around projections related to the growth of a market in X years (or another ambiguous factor).

A smart investor will create a foundation for their decision-making process that someone else can easily follow.

How this applies to growth experiments

This process of "showing your work" can help align coworkers around why an experiment deserves to be worked on now vs. later (prioritization). In addition, when you provide a source of truth for your decisions (both quantitative and qualitative data from customers), there are fewer disagreements around what problem you are trying to solve.

By far the biggest bottleneck I've seen on growth teams is that they don't create a firm foundation for the experiments that they run. Ideas and experiments are generated out of thin air. This is why I recommend having a section in every experiment doc that outlines why the experiment exists in the first place.

If you create a firm foundation for your experiments, when things go wrong you can easily outline your decision-making process and course-correct if needed. Show your work!

2. Diversify

The next strategy to limit your downside when running growth experiments is to diversify the types of experiments you run (based on risk). Some experiments are riskier than others. You should consider the following factors:

- Time investment (engineering resources needed, etc.)

- Scope of change (is it a smaller tweak or a massive project)

- Amount of visibility (how many people will interact with X)

- Time to achieve "significance" (how long until you pick a winner?)

From my perspective, the key takeaway in this section is that you should create a balanced "portfolio" of experiments to work on. If you are only working on massive, time-consuming projects, you should consider adding smaller, less ambiguous projects. This is especially helpful as I've seen teams burn out when they only work on tiny changes (or massive ones). Create a healthy balance!
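One rough way to check the balance of a portfolio is to score each experiment on the four factors above and see how the backlog splits by risk. The sketch below is only an illustration: the 1-5 scales, the bucket thresholds, the 70% cutoff, and the experiment names are all assumptions I made up, so tune them to your own team.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Experiment:
    name: str
    time_investment: int       # 1 (hours) to 5 (months of engineering)
    scope_of_change: int       # 1 (small tweak) to 5 (massive project)
    visibility: int            # 1 (few people see it) to 5 (everyone does)
    time_to_significance: int  # 1 (days) to 5 (many months)

    def risk_score(self) -> int:
        # Unweighted sum of the four factors (an assumption, not a rule).
        return (self.time_investment + self.scope_of_change
                + self.visibility + self.time_to_significance)

    def risk_bucket(self) -> str:
        # Hypothetical thresholds on the 4-20 score range.
        score = self.risk_score()
        if score <= 8:
            return "low"
        if score <= 14:
            return "medium"
        return "high"


def portfolio_mix(experiments):
    """Count experiments per risk bucket."""
    return Counter(e.risk_bucket() for e in experiments)


def is_lopsided(experiments, max_share=0.7):
    """True if one risk bucket holds more than max_share of the backlog."""
    mix = portfolio_mix(experiments)
    total = sum(mix.values())
    return total > 0 and max(mix.values()) / total > max_share


# Hypothetical backlog: one small tweak and three big bets.
backlog = [
    Experiment("Button copy tweak", 1, 1, 3, 2),
    Experiment("Pricing page redesign", 4, 4, 4, 4),
    Experiment("New onboarding flow", 5, 5, 5, 4),
    Experiment("Referral program", 4, 5, 3, 5),
]

print(portfolio_mix(backlog))
print(is_lopsided(backlog))
```

With this backlog, three of the four experiments land in the "high" bucket, so the check flags the portfolio as lopsided. Adding a couple of smaller tweaks would bring it back into balance.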

3. Document downstream effects

This next topic is something I wish growth people discussed more. In systems thinking, there's a concept called a negative feedback loop (or balancing loop), described below:

"Often in a balancing feedback loop, there is some sort of implicit or explicit goal. When the distance between your car and the car in front of you increases you accelerate, closing the gap. When the distance between you and the car becomes less than your desired distance (you’re too close) you will decelerate, either letting the friction of the road slow you down or applying the brakes." source

In growth, experiments are centered around the notion of cause/effect. "If we change X, Y will happen." It might look like this:

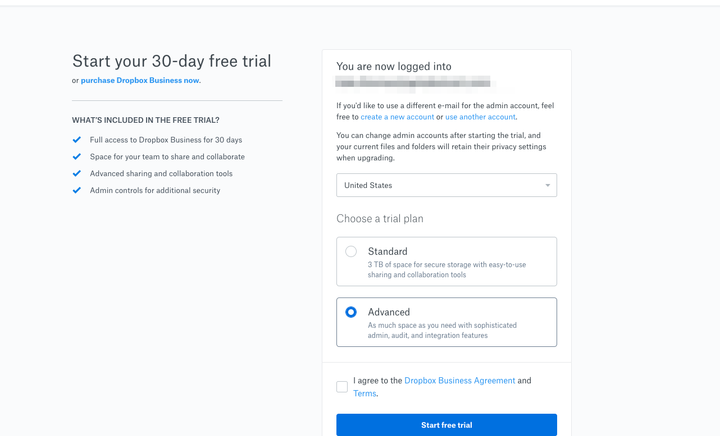

"If we decrease the complexity of our signup form, it will increase the number of people who signup."

What these experiments rarely factor in are downstream effects. These are unintended consequences that result from the system being interconnected.

In the example above, the hypothesis is that reducing the complexity of the signup form will increase conversion, which may be true. However, what happens if the new signups perform key actions less frequently because they aren't as engaged? This stuff happens ALL THE TIME.
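The signup example can be sketched as a simple check that looks at a downstream metric alongside the primary one. This is a rough illustration, not a statistical test: the function names, the 5% tolerance, and the numbers are all hypothetical, and a real analysis would also need to account for sample size and significance.

```python
def relative_change(control: float, variant: float) -> float:
    """Relative change of the variant versus the control."""
    return (variant - control) / control


def evaluate(primary_control, primary_variant,
             downstream_control, downstream_variant,
             max_downstream_drop=0.05):
    """Label a result using the primary metric and one downstream metric."""
    primary_lift = relative_change(primary_control, primary_variant)
    downstream_change = relative_change(downstream_control, downstream_variant)

    if primary_lift <= 0:
        verdict = "no win"
    elif downstream_change < -max_downstream_drop:
        verdict = "win with downstream cost"
    else:
        verdict = "win"
    return verdict, primary_lift, downstream_change


# Hypothetical numbers: signup conversion rises from 10% to 12%, but
# key actions per new signup fall from 5.0 to 4.0.
verdict, primary_lift, downstream_change = evaluate(0.10, 0.12, 5.0, 4.0)
print(verdict)
```

Here the simpler form "wins" on conversion, but the drop in key actions per signup is large enough that the result gets flagged instead of shipped blindly.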

Have a discussion

I'm not sure what the best process for documenting downstream effects is, but the most obvious way to address this issue is to talk about the potential side effects of the experiments that you run. The experiments you run are part of an interconnected system :)

In Conclusion

I don't mind risk, but minimizing your downside as much as possible can help you avoid a lot of pain.